GPU Computing

As well as boasting about massive improvements in 3D performance each generation, Nvidia has been pushing the more general-purpose characteristics of its graphics chips ever since the release of its G80 graphics processor. G80 was a product that changed the way Nvidia (and much of the industry, for that matter) thought about graphics cards, as they could no longer be used just for painting pretty pixels on your monitor.

Its CUDA architecture allows its GPUs to run applications written in C for CUDA, letting developers scale their code across the hundreds of stream processors in Nvidia's high-end GPUs. Essentially, the more parallelisable the application is, the faster it'll go.
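To illustrate how this works, here is a minimal sketch of a vector addition in C for CUDA. It is our own illustration rather than Nvidia sample code: `add_kernel` and `vector_add` are hypothetical names, and the sketch assumes a CUDA-capable Nvidia GPU, the `nvcc` compiler and two input vectors of equal length. Each GPU thread adds a single pair of elements, so the work spreads across however many stream processors the chip has.

```cuda
#include <cuda_runtime.h>
#include <vector>

// Each thread handles exactly one element; the GPU schedules the thread
// blocks across all of its stream processors.
__global__ void add_kernel(const float* a, const float* b, float* c, int n)
{
    int i = blockIdx.x * blockDim.x + threadIdx.x;  // global thread index
    if (i < n) {                                     // guard the last, partial block
        c[i] = a[i] + b[i];
    }
}

// Hypothetical host-side helper: copies the inputs to the GPU, launches the
// kernel and copies the result back. Assumes a.size() == b.size().
// Returns the first CUDA error encountered.
cudaError_t vector_add(const std::vector<float>& a,
                       const std::vector<float>& b,
                       std::vector<float>& c)
{
    const int n = static_cast<int>(a.size());
    const size_t bytes = a.size() * sizeof(float);
    c.resize(a.size());
    if (n == 0) return cudaSuccess;                  // nothing to launch

    float *d_a = nullptr, *d_b = nullptr, *d_c = nullptr;
    cudaError_t err = cudaMalloc(&d_a, bytes);
    if (err == cudaSuccess) err = cudaMalloc(&d_b, bytes);
    if (err == cudaSuccess) err = cudaMalloc(&d_c, bytes);
    if (err == cudaSuccess) err = cudaMemcpy(d_a, a.data(), bytes, cudaMemcpyHostToDevice);
    if (err == cudaSuccess) err = cudaMemcpy(d_b, b.data(), bytes, cudaMemcpyHostToDevice);
    if (err == cudaSuccess) {
        const int threads = 256;                         // threads per block
        const int blocks = (n + threads - 1) / threads;  // enough blocks to cover n
        add_kernel<<<blocks, threads>>>(d_a, d_b, d_c, n);
        err = cudaGetLastError();                        // catch launch errors
    }
    // cudaMemcpy waits for the kernel to finish before copying back.
    if (err == cudaSuccess) err = cudaMemcpy(c.data(), d_c, bytes, cudaMemcpyDeviceToHost);

    // cudaFree(nullptr) does nothing, so this is safe after an early failure.
    cudaFree(d_a);
    cudaFree(d_b);
    cudaFree(d_c);
    return err;
}
```

Called with two 1,000-element vectors filled with 1.0 and 2.0, `vector_add` should fill `c` with 3.0 in every slot. Apart from the `__global__` qualifier, the `<<<blocks, threads>>>` launch syntax and the built-in thread indices, it is ordinary C; the 256 threads per block is a common starting point rather than a tuned value.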

Nvidia has had a reasonable amount of success with this, but the real traction came when both Apple and Microsoft decided that their next generation operating systems would make extensive use of GPU acceleration to speed up highly parallel tasks that were traditionally run on the CPU.

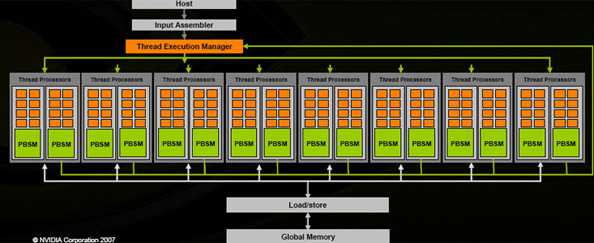

Nvidia's G80 graphics processor in Compute Mode

However, the company is yet to make a lot of money from its GPU Computing endeavours. The problem up until now has been that applications written in C for CUDA run only on Nvidia's GPUs, so they're not especially portable at the moment.

We don't want to take anything away from the developers who have moved their applications to the GPU and achieved some excellent performance improvements, but most of the apps are fairly low profile in the grand scheme of things. Of course, we must make an exception for Adobe's Creative Suite (which, incidentally, uses OpenGL rather than CUDA). The real traction will come when more of the big boys start accelerating parts of their applications with the GPU.

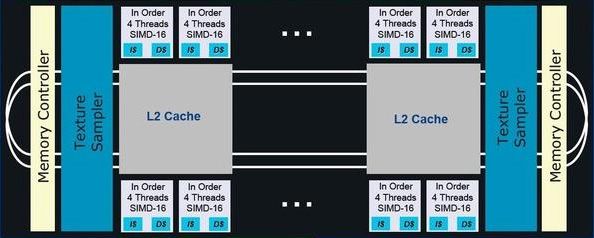

An early diagram of Intel's Larrabee many-core processor

We don't expect this to happen in the near term. While the principles of programming on the GPU are very much the same as on the CPU (C for CUDA is just C with a few extensions), developing a highly parallel application that runs well on the GPU requires a completely different mindset. We also get the feeling that developers are waiting for standards: they're not interested in their applications only working on half of the discrete GPUs in the world.
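The different mindset is easiest to see with a task that looks inherently serial, such as summing an array. A CPU loop adds one element at a time; on the GPU, one common approach is a tree reduction, in which each block of threads repeatedly adds pairs of values in shared memory, halving the number of active threads each step, until a single partial sum remains. This is a minimal sketch under our own assumptions: it needs a CUDA-capable GPU, `block_sum`, `parallel_sum` and the block size `kThreads` are hypothetical names, and the handful of per-block partial sums is finished off on the CPU for simplicity.

```cuda
#include <cuda_runtime.h>
#include <vector>

constexpr int kThreads = 256;  // threads per block; must be a power of two here

// Tree reduction: each block sums up to kThreads elements in shared memory.
__global__ void block_sum(const int* in, int* block_totals, int n)
{
    __shared__ int partial[kThreads];
    int tid = threadIdx.x;
    int i = blockIdx.x * blockDim.x + tid;
    partial[tid] = (i < n) ? in[i] : 0;  // pad the last block with zeros
    __syncthreads();

    // Halve the number of active threads at every step.
    for (int stride = blockDim.x / 2; stride > 0; stride /= 2) {
        if (tid < stride) {
            partial[tid] += partial[tid + stride];
        }
        __syncthreads();  // all threads finish this step before the next
    }
    if (tid == 0) {
        block_totals[blockIdx.x] = partial[0];  // one result per block
    }
}

// Hypothetical host helper: one kernel launch yields a partial sum per block,
// and the much shorter list of partials is added up on the CPU.
cudaError_t parallel_sum(const std::vector<int>& data, long long& total)
{
    total = 0;
    const int n = static_cast<int>(data.size());
    if (n == 0) return cudaSuccess;
    const int blocks = (n + kThreads - 1) / kThreads;
    std::vector<int> partials(blocks);
    int *d_in = nullptr, *d_out = nullptr;

    cudaError_t err = cudaMalloc(&d_in, data.size() * sizeof(int));
    if (err == cudaSuccess) err = cudaMalloc(&d_out, partials.size() * sizeof(int));
    if (err == cudaSuccess)
        err = cudaMemcpy(d_in, data.data(), data.size() * sizeof(int), cudaMemcpyHostToDevice);
    if (err == cudaSuccess) {
        block_sum<<<blocks, kThreads>>>(d_in, d_out, n);
        err = cudaGetLastError();
    }
    if (err == cudaSuccess)
        err = cudaMemcpy(partials.data(), d_out, partials.size() * sizeof(int), cudaMemcpyDeviceToHost);

    cudaFree(d_in);
    cudaFree(d_out);
    if (err == cudaSuccess) {
        for (int p : partials) total += p;  // finish the last step serially
    }
    return err;
}
```

Summing the integers 1 to 1,000 this way should give 500,500. The code itself is still plain C with a few extensions; what changes is the algorithm, which has to be restructured so that hundreds of threads can do useful work at once, with explicit synchronisation between steps.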

That said, there's no doubt that GPU Computing will gain traction eventually, given the recognition that both Apple and Microsoft have given it with OpenCL and DirectX Compute, as well as the inspiration that both have taken from Nvidia's C for CUDA programming model. However, we believe that significant revenue in the form of increased GPU sales won't start until at least 12 months from now.
